Qualify the Right Participants: A Guide to Better Screener Questions

Published: July 2, 2025

The success of user research studies hinges on getting the right participants. But often, the first hurdle researchers face is the screener questionnaire itself. Poorly worded questions can lead to inaccurate data, frustrated participants, and ultimately, wasted time and resources.

Screening isn't just about filtering people out; it's about accurately identifying those who genuinely fit the criteria and will provide valuable insights. Below are five common screener mistakes and how to fix them.

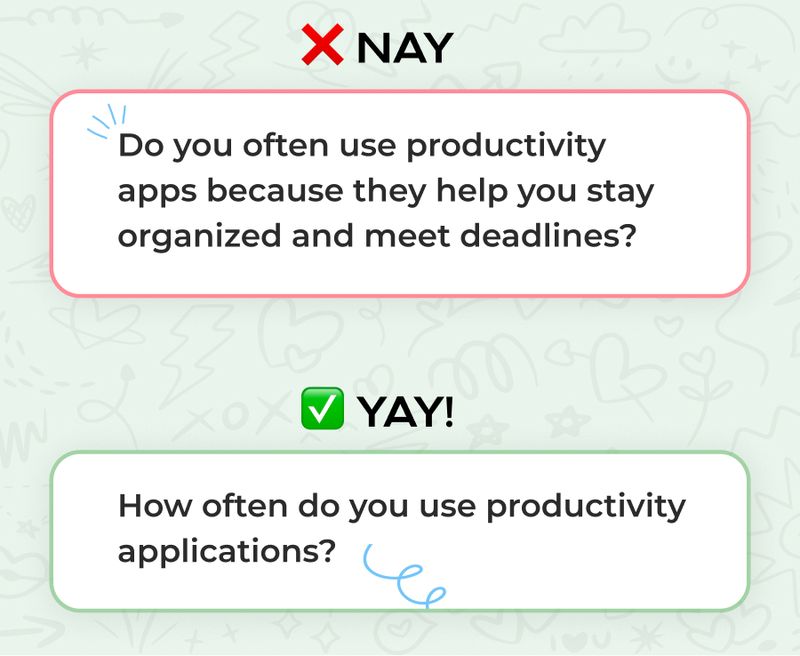

1. Leading Questions

Your screener's job is to figure out if someone fits, not to tell them how to fit. If your question gives away the "right" answer, you're not getting genuine responses; you're just getting people who want to qualify.

Here’s a real-life screener question that practically winks at the participant (we won't name names):

"Do you often use productivity apps because they help you stay organized and meet deadlines?"

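The clause "because they help you stay organized and meet deadlines" signals the answer you're hoping for, so anyone who wants to qualify will simply agree. Here's a 10-second rewrite. Which version is more likely to get an honest answer?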

Rewritten: "How often do you use productivity applications (e.g., to-do lists, calendar apps, note-taking tools)?"

- Never

- Rarely (less than once a month)

- Sometimes (1-3 times a month)

- Frequently (1-6 times a week)

- Daily

2. Double-Barreled Questions

This is where you ask two things in one go, and the participant can't possibly give a single, accurate answer. It's like asking someone if they like apples and oranges at the same time. What if they love one and hate the other?

"Are you a small business owner who uses cloud-based accounting software for your business operations?"

Why this is a nightmare: Someone might be a small business owner who doesn't use cloud-based accounting software, or they might use cloud-based accounting software without owning a small business. A "No" could mean either, so you're left with muddy data and no idea whether they actually meet your criteria.

Better Alternative (Split into two precise questions):

Question A: "Which of the following best describes your primary occupation?"

- Small business owner

- Employee at a small business

- Employee at a large corporation

- Not currently employed

- Other (please specify)

Question B: "Do you currently use cloud-based accounting software for your business operations?"

- Yes

- No

- Not applicable (I don't own a business)

By splitting it, you can easily qualify participants who both own a small business and actively use cloud-based accounting software. No more guesswork!

3. Ambiguous Questions

If a question isn't crystal clear, participants will interpret it differently, giving you inconsistent data.

Poor Example: "Do you often use social media?"

Why it's bad: "Often" is subjective. Does it mean once a day, once a week, or constantly? This lack of specificity makes it impossible to accurately compare responses or filter effectively.

Better Alternative: "How many hours per day, on average, do you spend on social media platforms?"

- Less than 1 hour

- 1-3 hours

- More than 3 hours

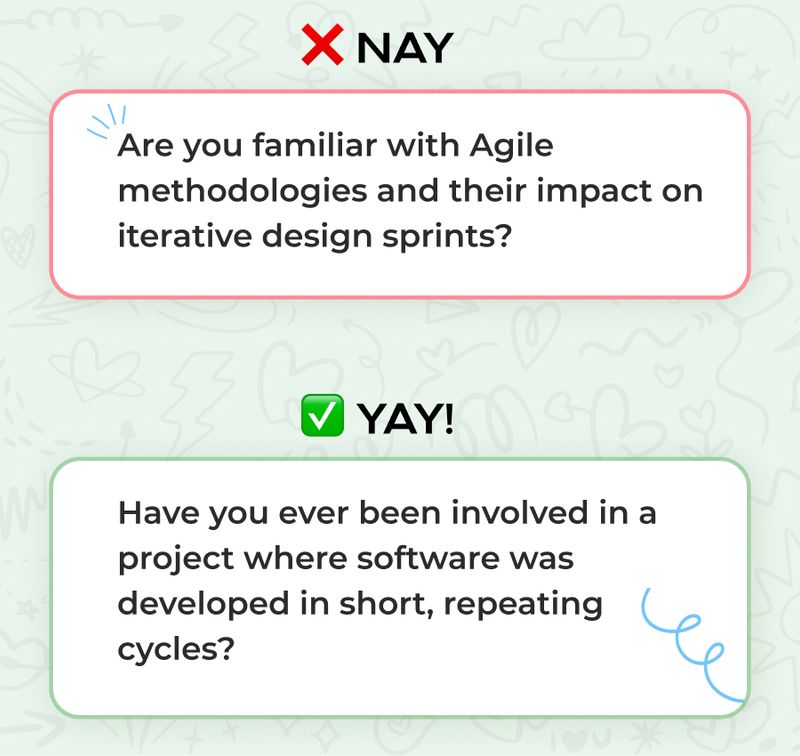

4. Jargon-Filled Questions

Using technical terms or industry-specific lingo in screeners can confuse participants and lead to inaccurate self-selection.

Poor Example: "Are you familiar with Agile methodologies and their impact on iterative design sprints?"

Why it's bad: Unless you're specifically recruiting experienced software developers or product managers, many general users won't understand these terms. They'll either get confused or pick random answers just to get through the screener.

Better Alternative: "Have you ever been involved in a project where software was developed in short, repeating cycles?" (You can follow up with clarifying questions during the actual study.)

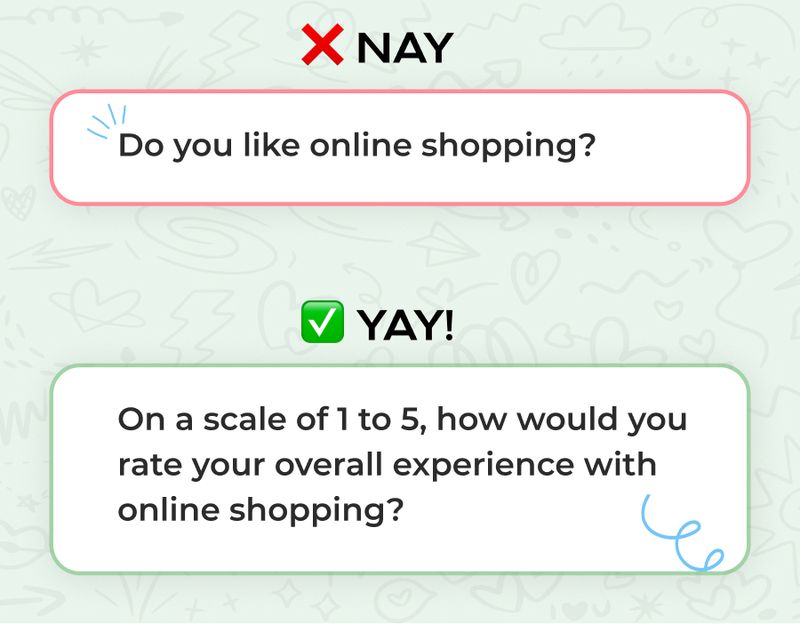

5. Yes/No Questions When Nuance Is Needed

Sometimes a simple yes or no doesn't capture the full picture, especially when qualification depends on a participant's degree of experience or strength of preference.

Poor Example: "Do you like online shopping?"

Why it's bad: This is too simplistic. A participant might like online shopping for some items but not others, or only from certain retailers. It doesn't capture the spectrum of their experience, which might be crucial for your study.

Better Alternative: "On a scale of 1 to 5, where 1 is 'strongly dislike' and 5 is 'strongly like', how do you feel about online shopping overall?" (Consider adding an open-text box for elaboration, but keep it optional so the screener stays quick.)

Your screener is the gatekeeper to valuable insights. Taking the time to craft clear, unbiased, and specific questions will significantly improve the quality of your participant pool and, by extension, your research findings.